Intelligent technologies are now an equal partner in everyday life. They “know” when you go to work, what you cook, and how you spend your free time with your family. But what are the risks of automation? How do smart homes actually work? Will they really make your life easier, or are they more likely to turn it into a nightmare? On 5 May, the one-day forum “Packet 1” will cover these topics in the premises of a former post office in Prague 1. The forum is intended for professionals, who can get admission for CZK 1,500 excluding VAT, and for students, for whom admission is free.


One of the main guests is Tomáš Poláček, who has worked in automation since the late 1990s and has built his own “smart house”. His talk will cover the following topics: standard and alternative building control systems; wired and wireless IoT systems; a vision of automating the user's surroundings; and the personal Big Brother.

Also speaking is architect Petr Kolář, who used early “home automation” systems in his building projects. Security expert and hacker Milan Rossa will show that smart technologies bring more than relief and a simpler life: his talk will deal with the “dark side” of automation, and he will attempt to break directly into a smart system.

We will also meet industrial design students from Prague's Vysoká škola uměleckoprůmyslová (Academy of Arts, Architecture and Design), who will present their visions of modern tools for everyday life in short talks.

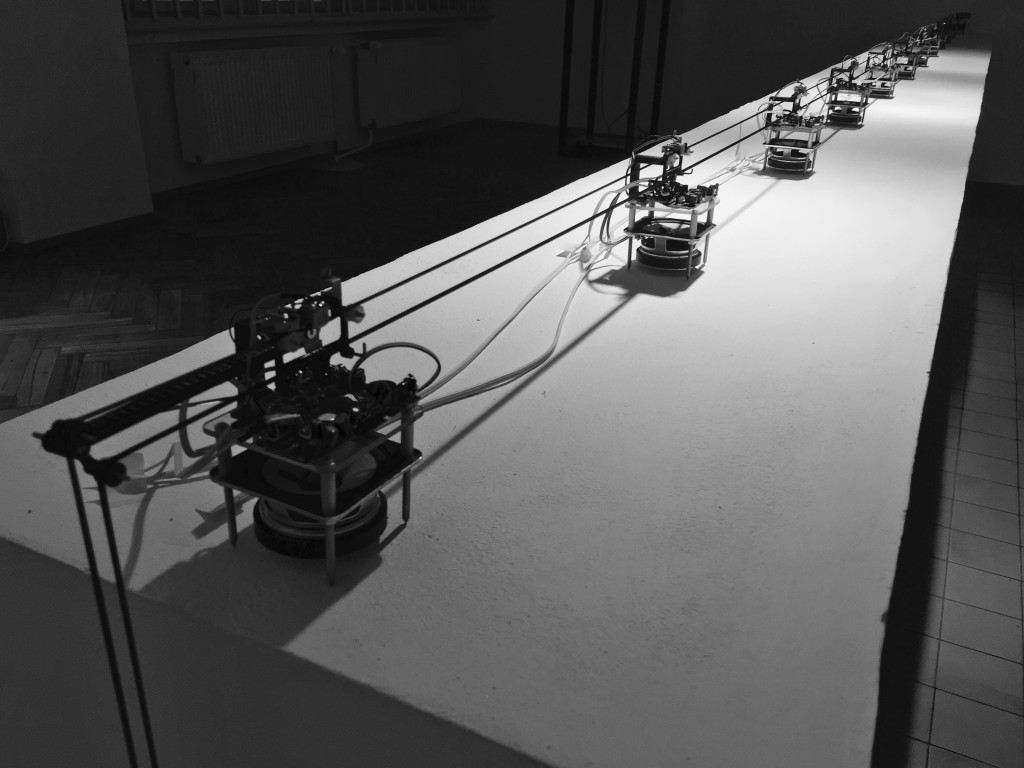

Tomislav Arnaudov will introduce the senses, that is, the sensors, of intelligent buildings, and Prokop Bartoníček will present the Internet of Things in the context of modern art.

Packet 1 is designed to give participants a “basic package of information on the given topic”. An independent and, above all, varied selection of experts ensures that the forum presents not only the key information on the subject but also current trends and practical experience from the field. The event's partner is Finder CZ, s.r.o., a company specializing in the manufacture of electronic components, which is neither a direct manufacturer nor a distributor of the technologies mentioned.

Last year, Packet 1 dealt with new technologies and interactive design in promotion. Speakers at the seminar included leading international figures in the field, such as Berlin designers Christopher Bauder and Benjamin Maus, who presented their award-winning interactive, kinetic, and light installations.